Passing through a GPU on Unraid is usually a pretty easy task, as long as it’s in one of the “GPU” / main PCIe slots found on most motherboards. There are some issues with using the primary slot, but they are easily overcome, as I discussed in my unRAID Pascal GPU Passthrough guide. For more advanced setups, however, you’ll need to start passing through other PCIe devices to virtual machines. This gets a bit trickier, and many guides take a shotgun approach to solving the problems associated with these setups. To maximize system stability, you’ll want to avoid these shotgun approaches and their pitfalls. In this post I’ll give you a basic understanding of PCIe and IOMMU groups, then discuss one of the more commonly touted “solutions” and the pitfalls associated with it.

Memory and IOMMUs

To make the basic information as accessible as possible, I’ll keep these descriptions at a high level and abstract away many of the details. At a basic level, the host OS runs on all of the physical hardware and so operates as a normal machine. This means that its kernel manages the CPU, and the CPU interfaces with memory through a memory management unit (MMU). The kernel of the host OS works with this MMU to create virtual memory spaces for all user programs (and even guest OSes). The MMU translates the virtual addresses used by a user program / virtual machine on the CPU into the physical addresses where the data actually lives in RAM.

RAM is not the only thing with a “physical” memory address, however. Most devices connected to the CPU are mapped into memory addresses, similar to RAM. These input / output (IO) devices then get their own MMU, an IOMMU, which translates the addresses they use much as the MMU does for the CPU. This hardware is especially important for high-speed devices, since the CPU can set up a data transfer and then let the device carry it out on its own, with the IOMMU translating its addresses. This is called direct memory access (DMA).

Even though devices can reach memory directly through DMA, not every device has its own management unit to perform the translation between physical memory space and the device itself. Since the host OS creates virtual memory spaces for each guest OS, it treats each group of devices “underneath” an IOMMU (an IOMMU group) as the smallest set of devices that can be isolated from other groups. This means that the devices downstream of an IOMMU are not isolated from the other devices within the same group.
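
To see how a particular machine is actually grouped, the sketch below reads the groups from sysfs. It assumes a Linux host (Unraid included) that exposes them under `/sys/kernel/iommu_groups`, with one numbered directory per group and one entry per PCI address inside its `devices` folder. The function name `list_iommu_groups` is my own, not part of any tool.

```python
import os


def list_iommu_groups(root="/sys/kernel/iommu_groups"):
    """Return {group_number: [pci_address, ...]} as read from sysfs."""
    groups = {}
    if not os.path.isdir(root):
        # IOMMU disabled in the BIOS / kernel, or not a Linux host.
        return groups
    for name in os.listdir(root):
        devices_dir = os.path.join(root, name, "devices")
        if name.isdigit() and os.path.isdir(devices_dir):
            groups[int(name)] = sorted(os.listdir(devices_dir))
    return groups


if __name__ == "__main__":
    for group, devices in sorted(list_iommu_groups().items()):
        print(f"IOMMU group {group}: {', '.join(devices)}")
```

If the function returns an empty dictionary, the IOMMU is most likely disabled in the BIOS or on the kernel command line.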

PCIe and ACS

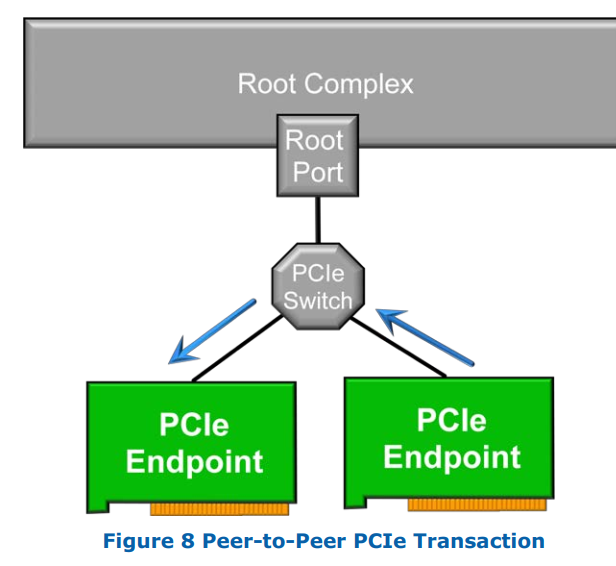

PCIe adds another layer of complication to this as it allows any PCIe devices connected within an IOMMU group to talk to each other directly. Called peer-to-peer transfers, these transactions can bypass the IOMMU, PCIe root complex, and the CPU, and therefore are completely ignorant of any virtualization. This breaks down any attempted isolation.

From a top-down view, this doesn’t cause issues for transactions that come from the CPU or that cross between IOMMU groups, since those are still translated. To cover the peer-to-peer case, the PCIe root complex(es) and other devices (for example PCIe switches) can provide a feature called Access Control Services (ACS) that prevents unauthorized peer-to-peer transactions from occurring. Where ACS is missing, devices that could reach each other directly end up in the same group. This generally means that IOMMU groups must be atomic and uniquely assigned to one virtualized memory space. Effectively, an IOMMU group cannot be broken up, and the entire group of devices must be assigned to ONE and only one OS (either the host or any one guest OS).
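
The “one group, one OS” rule can be written down as a quick sanity check. This is only a sketch under my own assumptions: `groups` maps a group number to its PCI addresses, `assignments` maps an address to a VM name, and anything unassigned is taken to belong to the host. The addresses and the VM name `win10` are made up. It also ignores the special case of PCIe bridges, which normally stay with the host even when the rest of their group is passed through.

```python
from collections import defaultdict


def find_split_groups(groups, assignments, default_owner="host"):
    """Return {group: {owner: [devices]}} for every group with more than one owner."""
    violations = {}
    for group, devices in groups.items():
        owners = defaultdict(list)
        for dev in devices:
            owners[assignments.get(dev, default_owner)].append(dev)
        if len(owners) > 1:
            violations[group] = dict(owners)
    return violations


# Hypothetical layout: a GPU with its audio function, plus a shared chipset group.
groups = {
    1: ["0000:01:00.0", "0000:01:00.1"],
    13: ["0000:03:00.0", "0000:04:00.0"],
}
assignments = {
    "0000:01:00.0": "win10",
    "0000:01:00.1": "win10",
    "0000:04:00.0": "win10",  # leaves 0000:03:00.0 with the host
}

for group, owners in find_split_groups(groups, assignments).items():
    print(f"IOMMU group {group} is split between: {owners}")
```

Group 1 passes because both functions go to the same VM. Group 13 is flagged because one of its devices stays with the host.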

ACS Override

Most consumer motherboard architectures are not designed to run virtualization to the fullest extent, even if the CPUs they’re designed for support the required features (e.g. VT-d for Intel). As a result, many of their chipsets or downstream PCIe switches are not equipped with ACS, and therefore many devices are lumped together into a single large IOMMU group.

People experimenting with Unraid, KVM, etc. are usually doing so on these consumer motherboards and chipsets. If their goal is to assign a new PCIe device to a virtual machine, that device will often end up in an IOMMU group shared with other devices, rather than in a group of its own. Often this is the main chipset’s IOMMU group, which means assigning it to a VM will take away most if not all functionality from the host OS! With Unraid, this often means removing its ability to talk to disks, making it all but useless.
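
Before handing a device to a VM, it helps to know what else would go along with it. Here is a small sketch, assuming you already have the groups as a dictionary of group number to PCI addresses. The helper name `group_mates` and the addresses are hypothetical.

```python
def group_mates(groups, device):
    """Return (group_number, other_devices_in_that_group) for a PCI address."""
    for group, devices in groups.items():
        if device in devices:
            return group, [d for d in devices if d != device]
    return None, []  # address not found in any group


# Hypothetical chipset group holding a USB controller, a SATA controller and a NIC.
groups = {
    0: ["0000:00:00.0"],
    13: ["0000:02:00.0", "0000:02:00.1", "0000:05:00.0"],
}

group, mates = group_mates(groups, "0000:02:00.0")
print(f"Group {group}; also dragged along: {mates}")
```

If the list of group mates includes the controller your array disks hang off, that is the situation described above.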

To solve this problem, most forums advise inexperienced users to override the Linux kernel’s ACS checks (called ACS override). This makes the kernel treat (nearly) every PCIe device downstream of a switch / device that lacks ACS as if it were properly isolated, so each one appears to be in its own IOMMU group. This allows you to “separate” a device from the rest of the group in order to assign it to a guest OS. From a top-down view, this doesn’t seem like much of a problem, since the CPU’s transactions will be translated through the IOMMU and get to the right device.
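
If you inherit a system and aren’t sure whether the override is active, you can check the kernel command line. The sketch below assumes the kernel carries the out-of-tree ACS override patch, which reads a `pcie_acs_override=` boot parameter with comma-separated options such as `downstream` and `multifunction`. The function name is my own.

```python
def acs_override_options(cmdline):
    """Return the pcie_acs_override options found in a kernel command line, or []."""
    for token in cmdline.split():
        key, _, value = token.partition("=")
        if key == "pcie_acs_override":
            return [opt for opt in value.split(",") if opt]
    return []


if __name__ == "__main__":
    try:
        with open("/proc/cmdline") as f:
            options = acs_override_options(f.read())
    except OSError:
        options = []  # not Linux, or /proc is unavailable
    print("ACS override:", ", ".join(options) if options else "not set")
```

An empty result only tells you the parameter is not on the command line. It says nothing about whether the hardware itself provides ACS.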

Pitfalls

However, this commonly recommended “solution” comes at the expense of system stability and security. Remember, ACS protects against unauthorized / errant transactions between devices. Two scenarios demonstrate how ACS override affects system stability when a physical IOMMU group is split up and its devices are assigned to two different OSes:

- Two GPUs from the same manufacturer are part of the same physical IOMMU group.

- Two different endpoints of a single PCIe device (a multifunction device).

In the case of the GPUs, the host’s driver (Linux in this case) could see both GPUs under the IOMMU group and initialize both. When the guest OS’s driver loads, it might also see both and try to communicate with them. Since two different drivers are now trying to manage the same hardware, they won’t be able to communicate with the devices properly, and may crash.

In the case of a multifunction device, the endpoints could be completely different devices from what is expected. A guest OS’s virtual memory space for its endpoint device could end up mapping to (have the same address as) another PCIe device endpoint that is assigned to the host OS. For example, a guest OS could be given a USB controller from the chipset while the host OS keeps the SATA storage controller. The guest’s USB controller might have the same virtual address as the REAL physical address of the SATA controller, so the guest’s USB transactions go straight to it, because the override has hidden the fact that no ACS is in the way. If this happens, it would corrupt the host’s transactions to the SATA controller, causing data corruption or crashing the host OS.
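
The address collision is easier to see in a toy model. The sketch below is not how real hardware is programmed. It only mimics the routing decision: without ACS, a write whose address falls inside a peer’s memory window is delivered straight to that peer, and with ACS it is sent upstream to the IOMMU for translation. The device names, addresses, and window sizes are all invented.

```python
def route(address, sender, bars, acs_enabled):
    """Return the name of whatever receives a DMA write from `sender` to `address`."""
    if not acs_enabled:
        for name, (start, size) in bars.items():
            if name != sender and start <= address < start + size:
                return name  # peer-to-peer: the IOMMU never sees it
    return "iommu"  # forwarded upstream and translated for the owning OS


# Physical memory windows (start, size) of two functions in one physical group.
bars = {
    "usb": (0xF7000000, 0x10000),   # given to the guest
    "sata": (0xF7100000, 0x2000),   # kept by the host
}

# The guest picks a buffer address that is valid inside its own virtual memory
# space but happens to match the SATA controller's real physical window.
guest_address = 0xF7100800

print(route(guest_address, "usb", bars, acs_enabled=True))   # iommu
print(route(guest_address, "usb", bars, acs_enabled=False))  # sata
```

With real ACS in place the write is translated and lands in guest memory. Without it, the same write hits the host’s SATA controller.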

Conclusion

Now that I’ve given you a bit of background on what IOMMU groups are and how they relate to PCIe, virtualization, and pass-through, we can understand the basic pitfalls associated with commonly suggested “solutions”. In many cases, people are just trying to experiment and tinker, and system stability is not their first thought. If and when stability becomes critical to your installation / use case, it’s important to understand how to set up and configure your system to avoid stability issues at all costs.

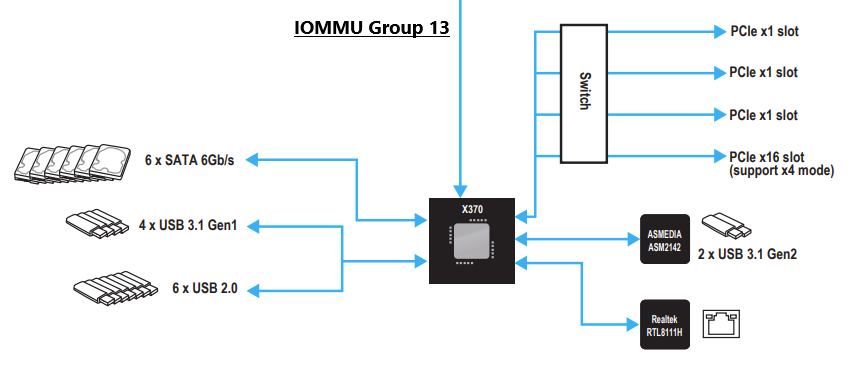

The biggest takeaway is that it’s important to put some forethought into your specific use case, the requirements of your VMs, and the hardware components you’re using in your installation. To that end, in a future post I’ll dig deeper into an X370 motherboard and explore what to consider when selecting hardware so that you can avoid these pitfalls.

References

If you’d like to read a bit more on the subject:

- https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html/virtualization_deployment_and_administration_guide/sect-iommu-deep-dive

- https://mathiashueber.com/pci-passthrough-ubuntu-2004-virtual-machine/

- https://forum.level1techs.com/t/using-acs-to-passthrough-devices-without-whole-iommu-group/122913/4

- http://vfio.blogspot.com/2014/08/iommu-groups-inside-and-out.html